The Digital Frontier: How Emerging Technologies Are Shaping the Future of Human Life

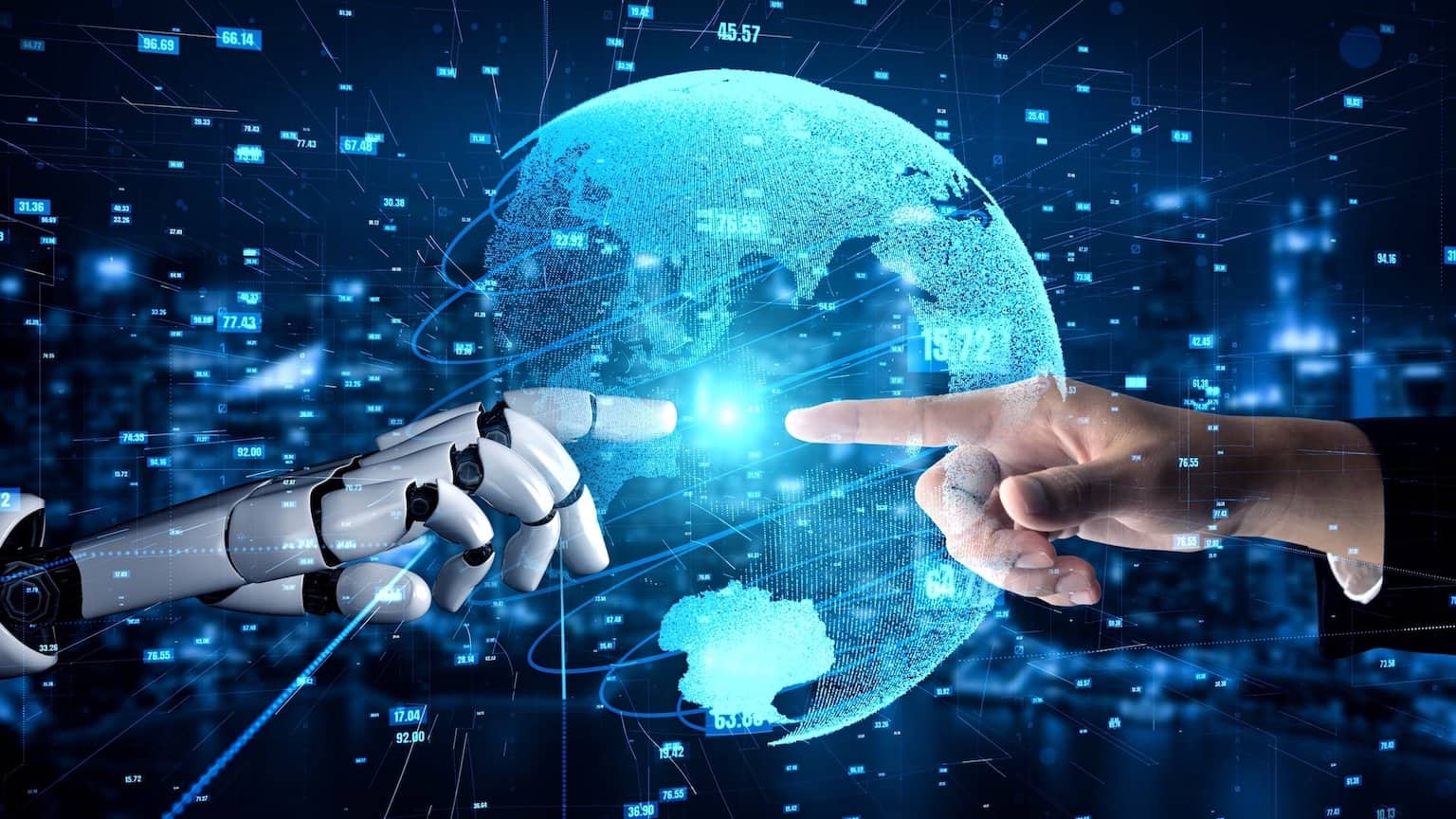

Technology has always been a defining force in human civilization. From the printing press to the internet, each leap forward has transformed the way we live, work, and connect. But in the 21st century, the pace of innovation has accelerated beyond anything humanity has seen before. Artificial intelligence, quantum computing, blockchain, and biotechnology are not just tools; they are reshaping the very fabric of society.

As the digital frontier expands, the question is no longer whether technology will change the world, but how deeply and quickly those changes will reshape every aspect of our lives.

1. The Age of Artificial Intelligence

Artificial Intelligence (AI) is a central engine of the current technological revolution. Once confined to academic research, AI now drives industries, economies, and daily life. Machine learning algorithms power everything from personalized shopping recommendations to medical diagnostics, while generative AI creates art, writes code, and assists professionals across many fields.

One of the most transformative aspects of AI is automation. In manufacturing, logistics, and finance, intelligent systems can perform repetitive or dangerous tasks more efficiently than humans. However, this automation also raises concerns about job displacement and the future of human work. The challenge ahead is not to resist AI but to adapt to it: by redefining education, retraining the workforce, and emphasizing the creativity, empathy, and problem-solving skills that machines still struggle to replicate.

AI ethics has also become a major area of focus. As algorithms increasingly influence decisions in healthcare, law enforcement, and hiring, society must confront questions of fairness, bias, and accountability. The goal is to create systems that are not only intelligent but also transparent and just.

2. Quantum Computing: The Next Great Leap

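While AI dominates today’s headlines, quantum computing may define tomorrow’s breakthroughs. Traditional computers process information in bits, each of which is either a one or a zero. Quantum computers instead use qubits, which can exist in a superposition of both states until they are measured. Combined with entanglement and interference, this lets quantum algorithms solve certain classes of problems, such as factoring large numbers or simulating molecules, far faster than any known classical method. It does not make every calculation faster.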
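To make the idea of a qubit holding more than one state concrete, here is a minimal sketch in plain Python. It models a single qubit as a pair of amplitudes and applies a Hadamard gate, a standard quantum gate that the article does not mention but that is commonly used to create an equal superposition. The function names are illustrative assumptions, and this is a classical simulation of one qubit, not a demonstration of quantum speedup.

```python
import math

# A single qubit modeled as two amplitudes (a, b) for the states |0> and |1>.
# Real amplitudes are enough for this illustration; in general they are complex.
ZERO = (1.0, 0.0)


def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    a, b = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a + b), s * (a - b))


def measurement_probabilities(state):
    """Return the probabilities of reading 0 or 1 when the qubit is measured."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


superposed = hadamard(ZERO)
p0, p1 = measurement_probabilities(superposed)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")  # P(0) = 0.50, P(1) = 0.50
```

Before measurement the qubit carries both amplitudes at once; measuring it yields a single 0 or 1 with the probabilities shown. Simulating n qubits this way needs 2^n amplitudes, which is one reason classical machines struggle to imitate large quantum systems.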

Quantum computing could revolutionize fields like cryptography, materials science, and pharmaceutical research. For example, it could speed up drug discovery by simulating molecular interactions at the atomic level. It could also threaten security: a sufficiently large, error-corrected quantum computer running Shor’s algorithm could break widely used public-key encryption schemes such as RSA, which currently help secure global communications.

Tech giants such as IBM, Google, and Intel are investing heavily in quantum research, racing toward what’s known as quantum advantage: the point where a quantum machine outperforms the most powerful classical supercomputers on a practically useful task. While the technology is still in its early stages, it promises to redefine the limits of what’s computationally possible.

3. The Blockchain Revolution: Beyond Cryptocurrency

Blockchain technology first gained global attention through Bitcoin, but its potential extends far beyond digital currency. At its core, blockchain is a decentralized digital ledger that records transactions securely and transparently.
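The ledger idea can be sketched in a few lines of Python. The example below is a simplified, single-process illustration, not how Bitcoin or any production blockchain is implemented: it leaves out decentralization, consensus, and digital signatures, and all function and field names are assumptions made for the sketch. What it does show is the core mechanism: each block stores the hash of the previous one, so altering an old record breaks the chain.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, transactions):
    """Hash a block's contents deterministically with SHA-256."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, transactions):
    """Append a block that commits to the hash of the previous block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    block = {
        "index": index,
        "prev_hash": prev_hash,
        "transactions": transactions,
        "hash": block_hash(index, prev_hash, transactions),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Check every stored hash and every link to the previous block."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_PREV_HASH
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(
            block["index"], block["prev_hash"], block["transactions"]
        )
        if block["hash"] != recomputed:
            return False
    return True


chain = []
add_block(chain, [{"from": "alice", "to": "bob", "amount": 5}])
add_block(chain, [{"from": "bob", "to": "carol", "amount": 2}])
print(is_valid(chain))  # True

chain[0]["transactions"][0]["amount"] = 500  # tamper with an old record
print(is_valid(chain))  # False
```

In a real network, many independent nodes hold copies of the chain and must agree on each new block, which is what makes rewriting history impractical rather than merely detectable.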

In principle, this design reduces the need for intermediaries like banks or notaries, allowing peer-to-peer interactions that can be faster, cheaper, and easier to audit. Industries such as supply chain management, healthcare, real estate, and finance are now exploring blockchain to increase transparency and security.

In healthcare, for instance, blockchain can be used to securely store patient records, ensuring privacy while enabling authorized access across different institutions. In logistics, it can track goods from origin to destination, reducing fraud and inefficiency.

Smart contracts, self-executing agreements coded on the blockchain, add another layer of innovation. They enable automated, tamper-resistant transactions that could one day take over work now done by traditional legal or financial intermediaries.
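The logic of a self-executing agreement can be illustrated with a toy escrow written in Python. This is only a simulation under stated assumptions: real smart contracts run on a blockchain platform and are typically written in a dedicated contract language, and the class, method names, and in-memory `balances` dictionary here are hypothetical stand-ins for on-chain state.

```python
class EscrowContract:
    """Toy escrow: funds are released to the seller once delivery is confirmed."""

    def __init__(self, buyer, seller, amount):
        self.buyer = buyer
        self.seller = seller
        self.amount = amount
        self.deposited = False
        self.settled = False

    def deposit(self, sender, balances):
        """Lock the buyer's funds in the contract."""
        if sender != self.buyer or self.deposited:
            raise ValueError("only the buyer may deposit, and only once")
        if balances[sender] < self.amount:
            raise ValueError("insufficient funds")
        balances[sender] -= self.amount
        self.deposited = True

    def confirm_delivery(self, sender, balances):
        """Pay the seller automatically when the buyer confirms delivery."""
        if sender != self.buyer:
            raise ValueError("only the buyer may confirm delivery")
        if not self.deposited or self.settled:
            raise ValueError("nothing to release")
        balances[self.seller] += self.amount
        self.settled = True


balances = {"buyer": 100, "seller": 0}
contract = EscrowContract("buyer", "seller", 40)
contract.deposit("buyer", balances)
contract.confirm_delivery("buyer", balances)
print(balances)  # {'buyer': 60, 'seller': 40}
```

The rules are enforced by the code itself rather than by a third party. On an actual blockchain, the contract's code and state would also be replicated across the network, so neither party could quietly change them.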

Despite its potential, blockchain still faces scalability and environmental challenges, most visibly the energy consumed by proof-of-work networks such as Bitcoin. Yet as the technology evolves, its decentralizing power continues to inspire a vision of a more transparent and equitable digital world.

4. The Internet of Things: A Connected World

The Internet of Things (IoT) is weaving technology into the fabric of everyday life. From smart thermostats and wearable fitness trackers to connected cars and industrial sensors, IoT devices collect and exchange data in real time, making our environments more responsive and efficient.

In smart cities, IoT systems manage traffic flow, monitor air quality, and optimize energy use. In healthcare, wearable devices continuously monitor patients’ vital signs and alert doctors to potential problems. In agriculture, smart sensors track soil conditions and crop health, enabling precision farming that conserves resources and boosts yield.

However, the explosion of connected devices also brings new cybersecurity risks. Each sensor, camera, or smart appliance represents a potential entry point for hackers. As IoT adoption grows, ensuring data security and privacy will become one of the most urgent challenges in the digital era.

5. Biotechnology and the Merging of Tech with Biology

Technology is no longer limited to machines—it’s merging with biology itself. Advances in biotechnology and bioengineering are enabling scientists to manipulate DNA, grow synthetic organs, and even enhance human abilities.

The development of CRISPR-Cas9, a gene-editing tool, has opened the door to correcting genetic defects and preventing hereditary diseases. Meanwhile, wearable and implantable medical devices can monitor or regulate bodily functions in real time, bridging the gap between human biology and digital technology.

Perhaps the most ambitious frontier is neurotechnology. Companies like Neuralink are developing brain-computer interfaces (BCIs) that could allow humans to communicate directly with machines. Such innovations could revolutionize healthcare by helping people with paralysis regain mobility—or even enable entirely new forms of human-computer interaction.

As with all powerful technologies, bio-digital convergence raises profound ethical questions. How much should we enhance the human body? Where do we draw the line between healing and augmentation? Society must navigate these dilemmas carefully to ensure that progress serves humanity, not undermines it.

6. The Cloud and Edge Computing Era

Behind nearly every modern technology lies the cloud—a global network of data centers that store and process information. Cloud computing has made it possible for businesses and individuals to access powerful computing resources on demand, enabling everything from streaming services to AI applications.

But as data volumes grow exponentially, a new paradigm is emerging: edge computing. Instead of sending all data to centralized cloud servers, edge computing processes information closer to where it’s generated—on local devices or nearby servers. This reduces latency, improves efficiency, and enables real-time decision-making.
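As a rough sketch of the pattern, the Python function below stands in for code running on an edge device. Instead of forwarding every raw sensor reading to the cloud, it summarizes the readings locally and flags only the values that cross a threshold. The function name, the threshold, and the sample temperatures are all illustrative assumptions.

```python
from statistics import mean


def process_at_edge(readings, threshold):
    """Summarize raw sensor readings locally and flag values above a threshold."""
    alerts = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": alerts,
    }


# Hypothetical temperature readings collected on the device.
raw = [21.0, 21.5, 22.0, 35.0, 21.5, 22.0]

# Only this compact summary would be sent upstream, not the full stream.
summary = process_at_edge(raw, threshold=30.0)
print(summary["alerts"])  # [35.0]
```

Because the decision about what counts as an alert is made on the device, the response does not wait on a round trip to a distant data center, and far less data crosses the network.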

Edge computing is essential for technologies like autonomous vehicles, smart factories, and augmented reality, where milliseconds can make a difference. Together, cloud and edge computing form the invisible backbone of our hyperconnected world.

7. Cybersecurity: Protecting the Digital Future

As technology becomes more integrated into every aspect of life, cybersecurity has become one of the most critical issues of our time. Data breaches, ransomware attacks, and identity theft are growing more sophisticated, targeting individuals, corporations, and even governments.

Protecting digital infrastructure requires constant vigilance and innovation. Emerging solutions include AI-driven threat detection, encryption designed to withstand quantum attacks, and zero-trust security frameworks, which verify every request rather than trusting anything by default. However, cybersecurity is not just a technical challenge; it’s a human one. Education, awareness, and responsible digital behavior are essential to building a safer online ecosystem.

8. Looking Ahead: Ethics and Human-Centered Technology

As we move deeper into the digital age, the biggest question is not what technology can do, but what it should do. Innovation must be guided by ethical principles that prioritize privacy, inclusivity, and human well-being.

Policymakers, tech companies, and citizens must work together to ensure that emerging technologies serve society’s broader goals—reducing inequality, protecting the environment, and improving quality of life. The future of technology should not be defined by gadgets or algorithms alone, but by how it empowers humanity to solve its greatest challenges.

Conclusion

The technological revolution is not a distant event—it’s unfolding right now, in real time, across every corner of the globe. Artificial intelligence, quantum computing, blockchain, IoT, and biotechnology are reshaping economies and redefining what it means to be human.

Yet amid this rapid change, one principle remains constant: technology is a reflection of us. Its power, promise, and peril depend on how we choose to wield it. If guided by wisdom, empathy, and a commitment to shared progress, the digital frontier can usher in a future that’s not just smarter—but more humane.